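In the previous article, we covered how to apply for Microsoft's Azure OpenAI service, deploy a GPT-3.5-turbo model, and obtain an API key. In this article, we'll start calling the Azure OpenAI API from Go.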

Before you start

- Apply for the Azure OpenAI service
- Deploy a GPT-3.5-turbo model or later
- Get your API key
- Get your endpoint

The official demo

Create a new file named chat_completions.go and copy the following code into it.

```go
package main

import (
	"context"
	"fmt"
	"log"
	"os"

	"github.com/Azure/azure-sdk-for-go/sdk/ai/azopenai"
	"github.com/Azure/azure-sdk-for-go/sdk/azcore/to"
)

func main() {
	azureOpenAIKey := os.Getenv("AZURE_OPENAI_KEY")
	// modelDeploymentID = deployment name, if model name and deployment name do not match change this value to name chosen when you deployed the model.
	modelDeploymentID := "gpt-35-turbo"

	// Ex: "https://<your-azure-openai-host>.openai.azure.com"
	azureOpenAIEndpoint := os.Getenv("AZURE_OPENAI_ENDPOINT")

	if azureOpenAIKey == "" || modelDeploymentID == "" || azureOpenAIEndpoint == "" {
		fmt.Fprintf(os.Stderr, "Skipping example, environment variables missing\n")
		return
	}

	keyCredential, err := azopenai.NewKeyCredential(azureOpenAIKey)
	if err != nil {
		// TODO: Update the following line with your application specific error handling logic
		log.Fatalf("ERROR: %s", err)
	}

	client, err := azopenai.NewClientWithKeyCredential(azureOpenAIEndpoint, keyCredential, nil)
	if err != nil {
		// TODO: Update the following line with your application specific error handling logic
		log.Fatalf("ERROR: %s", err)
	}

	// This is a conversation in progress.
	// NOTE: all messages, regardless of role, count against token usage for this API.
	messages := []azopenai.ChatMessage{
		// You set the tone and rules of the conversation with a prompt as the system role.
		{Role: to.Ptr(azopenai.ChatRoleSystem), Content: to.Ptr("You are a helpful assistant.")},

		// The user asks a question
		{Role: to.Ptr(azopenai.ChatRoleUser), Content: to.Ptr("Does Azure OpenAI support customer managed keys?")},

		// The reply would come back from the Azure OpenAI model. You'd add it to the conversation so we can maintain context.
		{Role: to.Ptr(azopenai.ChatRoleAssistant), Content: to.Ptr("Yes, customer managed keys are supported by Azure OpenAI")},

		// The user answers the question based on the latest reply.
		{Role: to.Ptr(azopenai.ChatRoleUser), Content: to.Ptr("Do other Azure AI services support this too?")},

		// from here you'd keep iterating, sending responses back from the chat completions API
	}

	resp, err := client.GetChatCompletions(context.TODO(), azopenai.ChatCompletionsOptions{
		// This is a conversation in progress.
		// NOTE: all messages count against token usage for this API.
		Messages:   messages,
		Deployment: modelDeploymentID,
	}, nil)
	if err != nil {
		// TODO: Update the following line with your application specific error handling logic
		log.Fatalf("ERROR: %s", err)
	}

	for _, choice := range resp.Choices {
		fmt.Fprintf(os.Stderr, "Content[%d]: %s\n", *choice.Index, *choice.Message.Content)
	}
}
```

Now open a command prompt and run:

```shell
go mod init chat_completions.go
go mod tidy
go run chat_completions.go
```

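To be honest, when I pasted this official code in as-is, it failed right away with errors saying that some of the APIs it calls do not exist. I never tracked down the cause. One plausible explanation, which I have not verified, is a version mismatch: the azopenai module has been in beta with breaking API changes between releases, so the version that go mod tidy pulls in may not be the one the sample was written for. Either way, I ended up not using the official demo.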

Third-party library: sashabaranov/go-openai

```go
package main

import (
	"context"
	"fmt"

	"github.com/sashabaranov/go-openai"
)

func main() {
	azureOpenAIKey := "yourAPIKey"
	azureOpenAIEndpoint := "yourEndpoint"
	azureOpenAIModelName := "gpt-3.5-turbo-1106" // your model name
	azureOpenAIDeploymentName := "gpt-35-1106"   // your deployment name

	config := openai.DefaultAzureConfig(azureOpenAIKey, azureOpenAIEndpoint)
	// Map the model name used in requests to the Azure deployment name.
	config.AzureModelMapperFunc = func(model string) string {
		azureModelMapping := map[string]string{
			azureOpenAIModelName: azureOpenAIDeploymentName,
		}
		// Look up the model that was actually requested.
		return azureModelMapping[model]
	}

	client := openai.NewClientWithConfig(config)

	resp, err := client.CreateChatCompletion(
		context.Background(),
		openai.ChatCompletionRequest{
			Model: azureOpenAIModelName,
			Messages: []openai.ChatCompletionMessage{
				{
					Role: openai.ChatMessageRoleUser,
					// Prompt: "Please explain what anatomy is, in 30 words or fewer."
					Content: "请解释下什么是解剖学,请回答在30个词之内",
				},
			},
		},
	)
	if err != nil {
		fmt.Printf("ChatCompletion error: %v\n", err)
		return
	}

	fmt.Println(resp.Choices[0].Message.Content)
}
```

- azureOpenAIKey 申请获得的api key

- azureOpenAIEndpoint 跟api key同一页面下获取到endpoint,url地址

- azureOpenAIModelName 模型名称 gpt-3.5-turbo / gpt-3.5-turbo-1106 / gpt-4-32k 等等

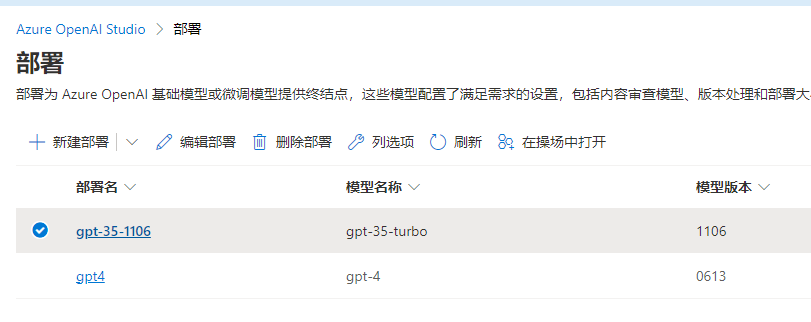

- azureOpenAIDeploymentName 你部署模型的名称,如下图所示
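If you deploy more than one model, the mapping from model name to deployment name can live in a small standalone function. The following is a minimal sketch, not code from the library: deploymentFor and the deployments table are hypothetical names, "my-gpt4-32k" is a made-up deployment name, and the fallback to the model name itself is my own assumption about reasonable behavior.

```go
package main

import "fmt"

// deployments maps OpenAI-style model names to Azure deployment names.
// The entries are placeholders; use the names from your own Azure resource.
var deployments = map[string]string{
	"gpt-3.5-turbo-1106": "gpt-35-1106",
	"gpt-4-32k":          "my-gpt4-32k", // hypothetical deployment name
}

// deploymentFor returns the deployment mapped to model. Unknown models fall
// back to the model name itself, which only works if a deployment with that
// exact name exists (an assumption of this sketch).
func deploymentFor(model string) string {
	if name, ok := deployments[model]; ok {
		return name
	}
	return model
}

func main() {
	fmt.Println(deploymentFor("gpt-3.5-turbo-1106")) // prints "gpt-35-1106"
	fmt.Println(deploymentFor("gpt-4"))              // prints "gpt-4" (no mapping, falls back)
}
```

Because AzureModelMapperFunc takes a func(model string) string, a function like this can be assigned to it directly: config.AzureModelMapperFunc = deploymentFor.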

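# Output

解剖学是研究动植物体内部结构和组织的科学,包括器官、系统和组织之间的相互关系和功能。

(In English: "Anatomy is the science that studies the internal structure and organization of animals and plants, including the relationships and functions among organs, systems, and tissues.")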

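Summary

The official docs provide a fairly detailed demo, so I did not expect it to fail when pasted as-is; fortunately, the third-party library offers a convenient API. Azure OpenAI can be called from within mainland China, is billed by usage, lets you set a quota cap, and lets you choose which models to deploy. For my purposes, it has been a complete replacement for OpenAI's own service.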